OpenAI O3 In-Depth Review: A Focused Step Toward General Intelligence

OpenAI’s O3 model, released in April 2025, demonstrates significant progress in reasoning, autonomous tool use, and visual understanding—positioning itself as a practical step toward general-purpose intelligence.

1. Introduction: O3, a Quietly Released Model

In April 2025, OpenAI quietly released a new model named O3. There was no official blog post, no public launch event—O3 simply appeared in the ChatGPT interface and the OpenAI API model list, and has since been gradually integrated into platforms like HuggingChat and Poe.

Despite the lack of detailed technical documentation, OpenAI has listed O3 in its official model catalog and confirmed its support for both text and image inputs. Through API exploration and real-world usage, developers and early users have identified several key features of the model: strong reasoning capabilities, response speed comparable to GPT-3.5, support for basic visual input, and a different pricing structure compared to the GPT-4 family.

2. Model Highlights: Core Capabilities of OpenAI’s O3

Based on information from OpenAI’s model reference page and public testing by early users, the O3 model offers several noteworthy features:

Documented and Community-Verified Capabilities

Enhanced Text Understanding and Reasoning:

O3 demonstrates improved consistency and accuracy over GPT-3.5 on complex text-based tasks such as mathematical reasoning, code generation, and logical analysis. Through public projects and testing, multiple developers have reported more stable performance in chain-of-thought (CoT) reasoning scenarios.

Image Input Support (via API):

According to OpenAI’s documentation, O3 supports image input through the Vision API, allowing developers to upload and analyze images. Current capabilities include OCR text recognition, image captioning, and basic visual-text integration tasks.
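As a rough illustration of what an image request might look like, the sketch below builds a payload in the Chat Completions style, with one text part and one image part encoded as a data URL. The model identifier "o3", the PNG MIME type, and the helper name `build_image_request` are assumptions made for this example; nothing is sent over the network.

```python
import base64
import json


def build_image_request(image_bytes: bytes, question: str, model: str = "o3") -> dict:
    """Build a Chat Completions style payload with one text part and one image part."""
    # Images can be passed inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # assumed model identifier
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    placeholder = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real image
    payload = build_image_request(placeholder, "What text appears in this image?")
    print(json.dumps(payload, indent=2))
```

The same payload shape would serve OCR, captioning, or chart questions; only the text part changes.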

Faster Response Than GPT-4, Comparable to GPT-3.5:

Users report that O3 responds significantly faster than GPT-4, which would make it a candidate for latency-sensitive applications. Community API benchmarks support this observation.

Accessible via OpenAI API, Designed for Practical Use:

O3 is accessible via the OpenAI API and supports a wide range of tasks. According to OpenAI’s pricing page, it costs $10.00 per million input tokens, $2.50 per million cached input tokens, and $40.00 per million output tokens, positioning it as a high-performance, premium-priced model.
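To make those rates concrete, here is a minimal cost estimator using the three prices quoted above. It assumes cached tokens are a subset of the input tokens and are billed at the cached rate instead of the full input rate; the function name `estimate_cost` is made up for this example.

```python
# Prices in USD per one million tokens, as quoted in this review.
INPUT_PER_M = 10.00
CACHED_INPUT_PER_M = 2.50
OUTPUT_PER_M = 40.00


def estimate_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request.

    Assumption: cached_tokens is the part of input_tokens served from cache.
    """
    if min(input_tokens, output_tokens, cached_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    if cached_tokens > input_tokens:
        raise ValueError("cached_tokens cannot exceed input_tokens")
    uncached = input_tokens - cached_tokens
    total = (
        uncached * INPUT_PER_M
        + cached_tokens * CACHED_INPUT_PER_M
        + output_tokens * OUTPUT_PER_M
    )
    return total / 1_000_000


if __name__ == "__main__":
    # 2,000 input tokens (1,000 of them cached) and 500 output tokens.
    print(f"${estimate_cost(2000, 500, cached_tokens=1000):.4f}")  # $0.0325
```

Output tokens dominate the bill at these rates, so long answers cost far more than long prompts.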

Although OpenAI calls O3 its most powerful reasoning model, it has not disclosed details about its architecture, size, or training data. Its connection to previous models like GPT-4 or GPT-4o remains unclear, and any claims of lineage are speculative.

3. Real-World Performance: What OpenAI O3 Can Actually Do

1. Reasoning and Problem Solving

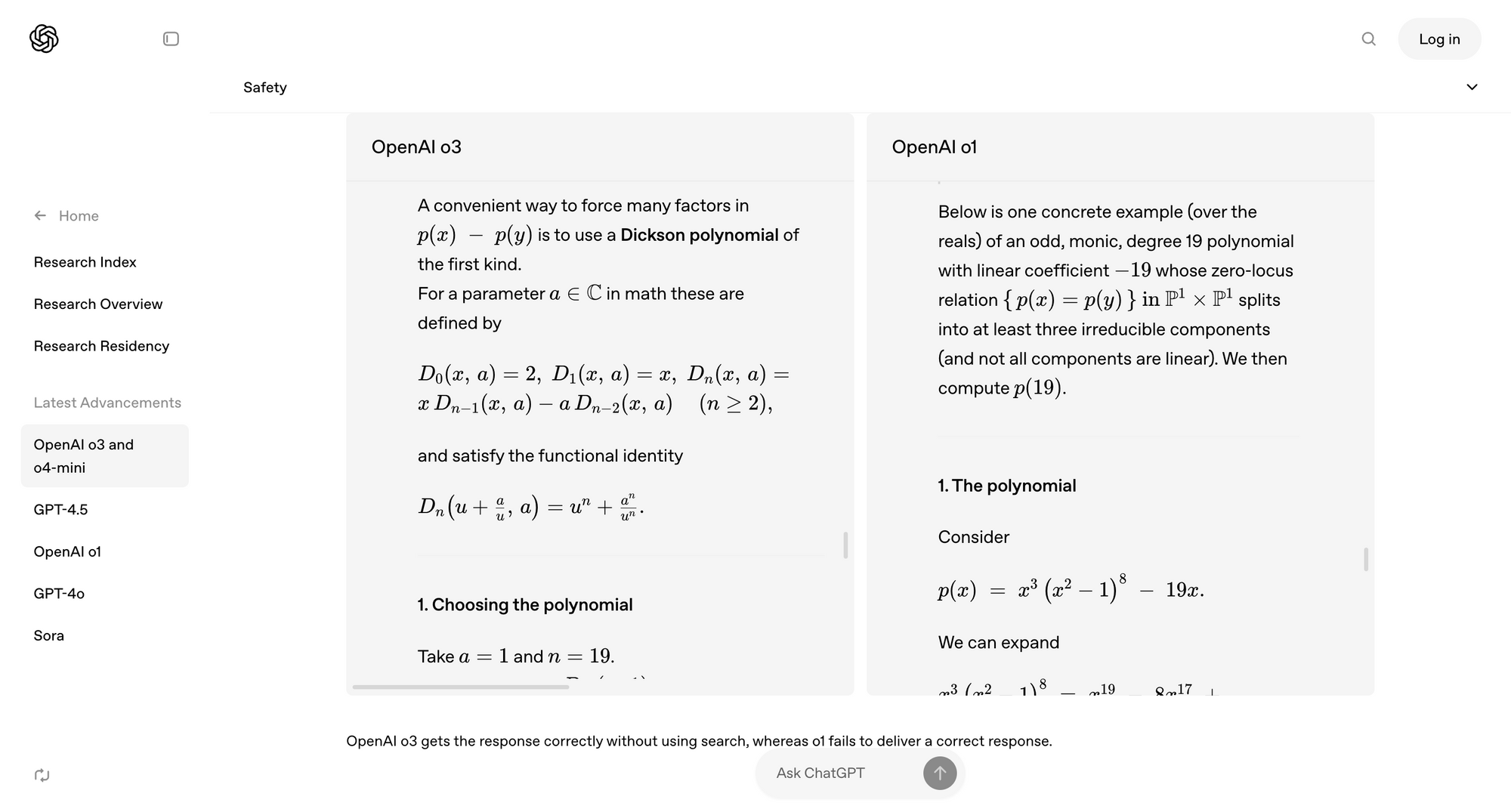

O3 employs advanced “simulated reasoning” techniques, enabling it to tackle complex problems and deliver in-depth analysis. In practical testing, the model has demonstrated strong multi-step reasoning skills, particularly in scientific and mathematical domains.

2. Visual and Image Understanding

O3 shows significant improvements in visual reasoning. It can analyze complex charts, scientific illustrations, and extract critical insights from uploaded images. This expands the model’s usefulness across educational, technical, and professional domains.

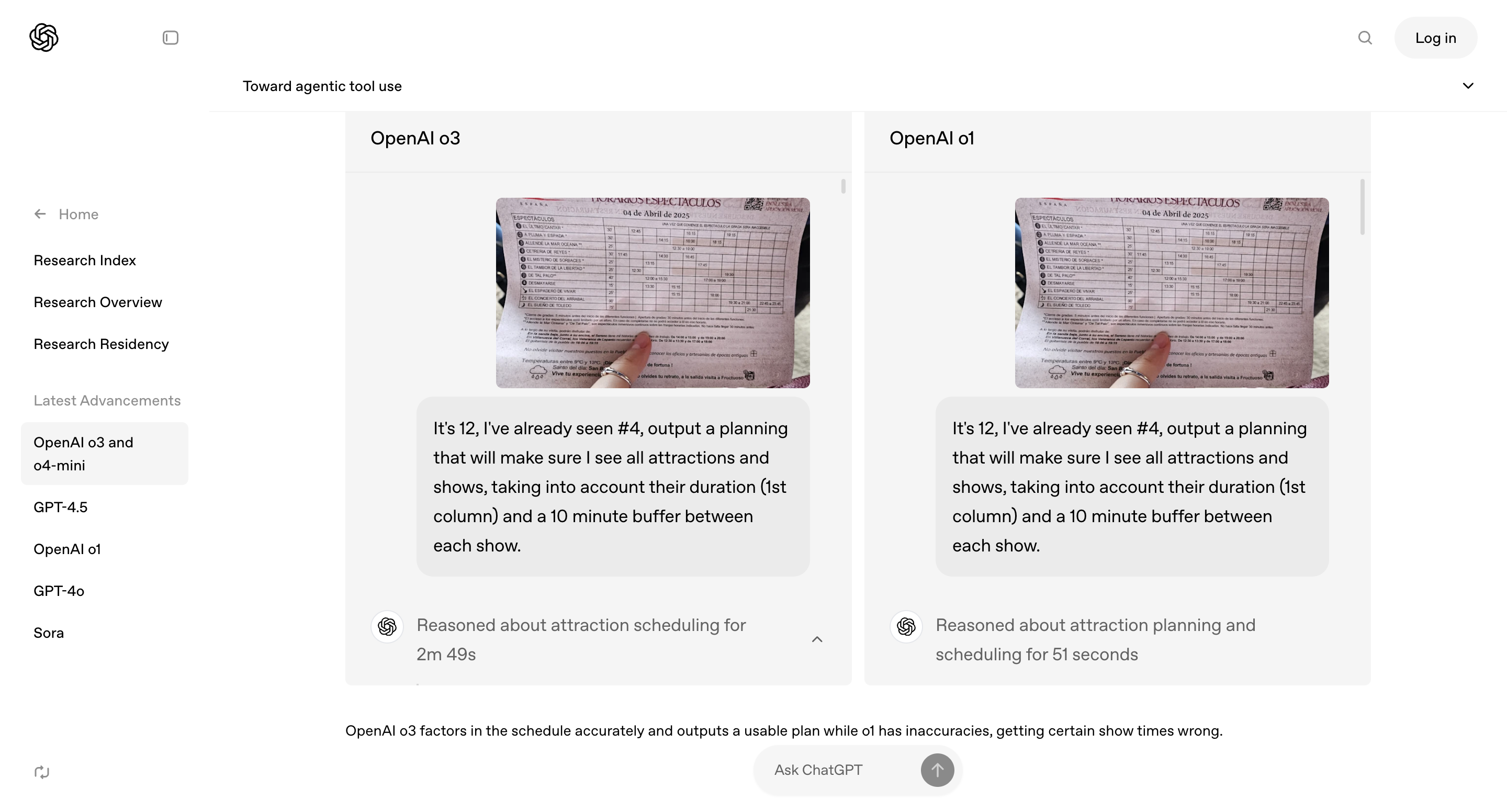

3. Tool Use and Autonomous Capabilities

O3 is the first reasoning model to demonstrate autonomous tool usage. It can independently decide when and how to utilize tools like web browsing, Python programming, and file handling. This allows O3 to search for information, write and execute code, and interpret various file formats — all without human hand-holding.

OpenAI’s official account (@OpenAI) posted:

“Introducing OpenAI o3 and o4-mini—our smartest and most capable models to date.

For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation.”

— OpenAI (@OpenAI) April 16, 2025
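The general pattern behind tool use can be sketched locally: the model names a tool and supplies JSON arguments, and the host program runs the matching function and returns the result. The example below is a simulation under stated assumptions, not OpenAI's implementation. The tools `search_web` and `word_count` are hypothetical stubs, and the "model" is replaced by a scripted list of calls.

```python
import json
from typing import Callable, Dict, List


def search_web(query: str) -> str:
    """Hypothetical stub standing in for a real web search tool."""
    return f"stub search result for: {query}"


def word_count(text: str) -> str:
    """Hypothetical stand-in for a file or text analysis tool."""
    return str(len(text.split()))


# Registry mapping tool names to local Python callables.
TOOLS: Dict[str, Callable[..., str]] = {
    "search_web": search_web,
    "word_count": word_count,
}


def dispatch(tool_call: dict) -> dict:
    """Run one requested tool call and wrap the result as a tool message."""
    name = tool_call["name"]
    arguments = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    if name not in TOOLS:
        content = f"error: unknown tool '{name}'"
    else:
        content = TOOLS[name](**arguments)
    return {"role": "tool", "name": name, "content": content}


def run_tool_calls(tool_calls: List[dict]) -> List[dict]:
    """Execute each call in order; a real loop would feed results back to the model."""
    return [dispatch(call) for call in tool_calls]


if __name__ == "__main__":
    # Scripted calls standing in for what a model might request.
    scripted = [
        {"name": "search_web", "arguments": json.dumps({"query": "o3 release date"})},
        {"name": "word_count", "arguments": json.dumps({"text": "tools can be chained together"})},
    ]
    for message in run_tool_calls(scripted):
        print(message)
```

In a live system the model, not a script, chooses which call comes next, and each tool result is appended to the conversation before the model continues.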

4. Comparison with Previous Models: The Progress and Trade-offs of O3

1. Leap in Reasoning Ability

Compared to its predecessor, O1, O3 shows a significant improvement in handling complex problems.

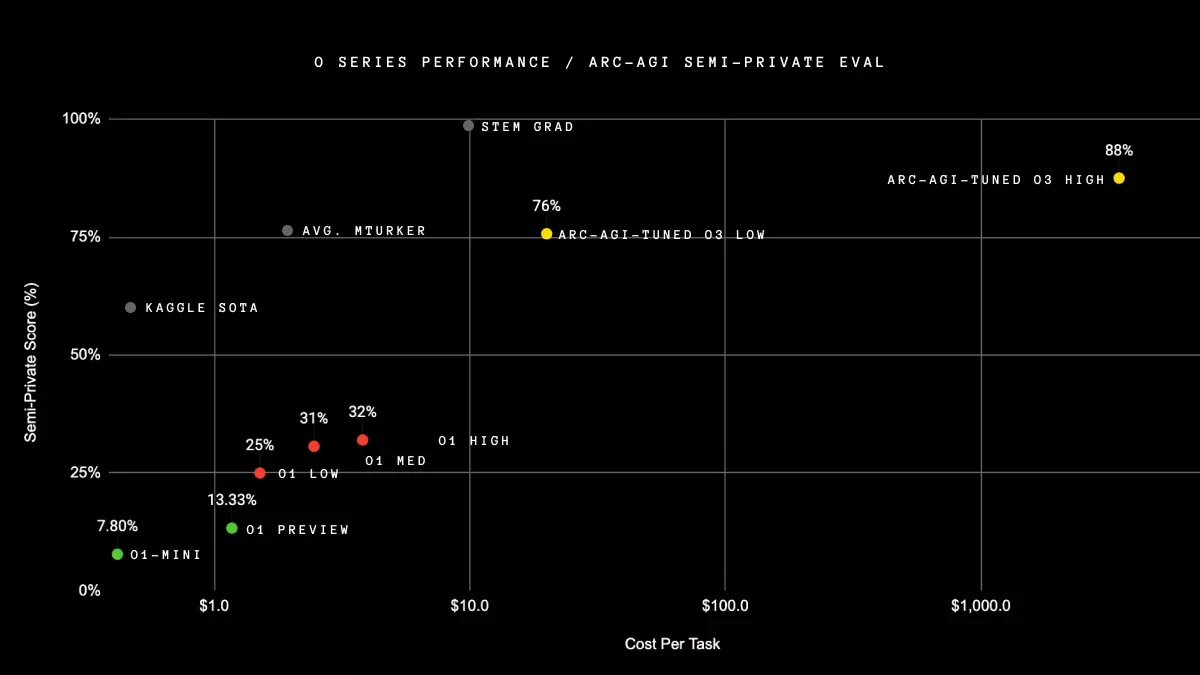

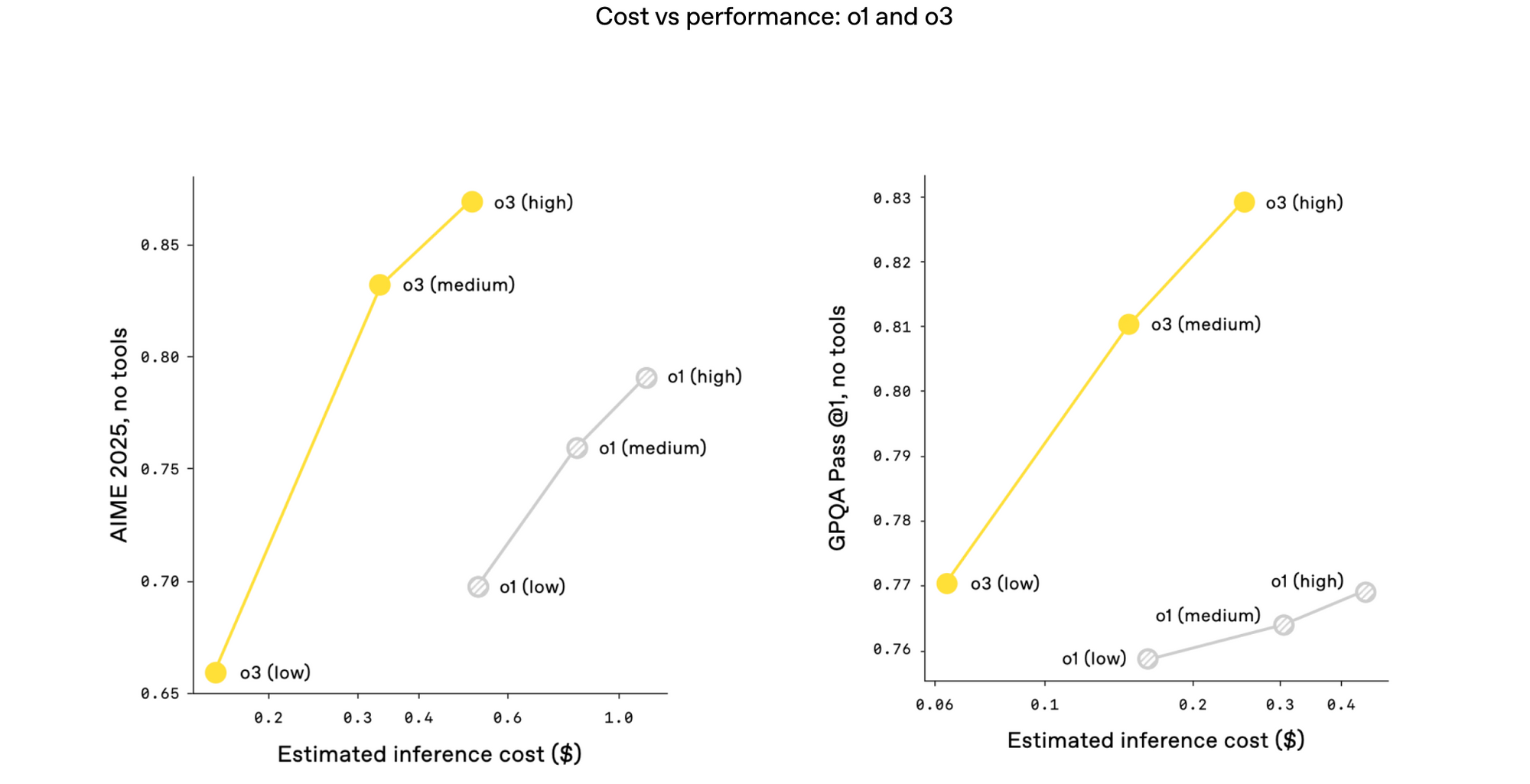

2. Balancing Resource Consumption and Response Speed

Of course, greater capabilities come with a higher computational cost. O3 requires more resources to operate, resulting in slightly slower response times. However, OpenAI has provided multiple versions to suit different user needs, particularly with the O3-mini series offering more flexibility.

The O3-mini offers three levels of reasoning intensity:

Low: Fastest response, ideal for tasks that require speed but less complex reasoning.

Medium: A balanced approach between speed and capability.

High: Best for deep analysis tasks that require the highest reasoning power but may have longer response times.
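In API terms, the three levels would likely be selected per request. The sketch below builds a request body that sets a `reasoning_effort` field, which to my understanding is how the Chat Completions API exposes this setting for reasoning models. The model identifier "o3-mini" and the helper name are assumptions for illustration, and no request is actually sent.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_reasoning_request(prompt: str, effort: str = "medium", model: str = "o3-mini") -> dict:
    """Build a request body that pins the reasoning effort level."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": model,  # assumed model identifier
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    quick = build_reasoning_request("Summarize this ticket in one line.", effort="low")
    deep = build_reasoning_request("Prove that the sum of two even numbers is even.", effort="high")
    print(quick["reasoning_effort"], deep["reasoning_effort"])
```

Validating the level up front turns a typo such as "hihg" into an immediate local error rather than a rejected API call.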

3. Diverse Application Scenarios

Based on different reasoning levels and response speeds, the O3 series can be adapted to a wide range of use cases:

O3 Full Version: Suitable for scientific research, advanced data analysis, and complex applications that require multiple toolchains.

O3-mini-high: Ideal for content creation, educational tutoring, strategic assistance, and other scenarios where reasoning power is key.

O3-mini-medium/low: Best suited for everyday assistants, customer service, basic information queries, and tasks where response speed is a priority.
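The mapping above can be written as a small lookup. This is only a sketch of the recommendations in this section: the task category names are invented for the example, and the choice between "low" and "medium" for everyday tasks is an assumption controlled by a `speed_first` flag.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelChoice:
    model: str
    reasoning_effort: Optional[str]  # None means no effort level is set


# Hypothetical task categories drawn from the use cases listed above.
HEAVY_TASKS = {"scientific_research", "data_analysis", "multi_tool_workflow"}
REASONING_TASKS = {"content_creation", "tutoring", "strategy"}
FAST_TASKS = {"everyday_assistant", "customer_service", "info_query"}


def choose_model(task: str, speed_first: bool = True) -> ModelChoice:
    """Pick a model tier for a task category, following this section's guidance."""
    if task in HEAVY_TASKS:
        return ModelChoice("o3", None)
    if task in REASONING_TASKS:
        return ModelChoice("o3-mini", "high")
    if task in FAST_TASKS:
        return ModelChoice("o3-mini", "low" if speed_first else "medium")
    raise ValueError(f"unknown task category: {task!r}")


if __name__ == "__main__":
    for task in ("data_analysis", "tutoring", "customer_service"):
        print(task, choose_model(task))
```

Keeping the routing in one function makes it easy to revisit the split as pricing or latency changes.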

5. Expert Opinions and Community Feedback: The Real-World Performance of OpenAI O3

1. Expert Opinions

In an interview with Business Insider, OpenAI's CEO Sam Altman discussed the challenges the company faces regarding AI model naming. He mentioned that there are plans to reform the naming system by summer 2025 to streamline the product line and improve user experience. When talking about new models like O3 and O4-mini, Altman acknowledged that these names might cause some confusion but emphasized that these models represent significant breakthroughs in reasoning AI.

2. Popular Twitter Commentary

Zvi Mowshowitz (@TheZvi) emphasized that O3's greatest highlight lies in its tool-using capabilities:

"The ability to use tools, connect them together, and sustain that process is the standout feature of O3."

At the same time, he also pointed out the model's shortcomings:

"By today’s standards, it hallucinates at an alarmingly high rate and exhibits a troubling level of deceptive behavior."

— Zvi Mowshowitz (@TheZvi) April 18, 2025

3. Community Feedback

On the r/OpenAI subreddit, user feedback about the O3 model has been mixed. One user commented:

"O3 feels like a stripped-down version of its predecessors. While it is smarter and more to the point in some areas, it falls short in others."

(From the thread "o3 is disappointing" by u/Atmosphericnoise in r/OpenAI.)

This reflects some users’ challenges in adapting to the new model's features.

Conclusion: Is O3 the Closest We’ve Been to AGI?

First, it’s important to clear up a potential misunderstanding: O3, released by OpenAI in April 2025, is not last year’s GPT-4o (also known as GPT-4 Omni). The names look similar, but they refer to separate models. OpenAI presents O3 as a reasoning model rather than an upgrade of GPT-4, and although its exact relationship to the GPT-4 family has not been disclosed, it represents a new attempt that brings OpenAI closer to achieving Artificial General Intelligence (AGI).

The breakthroughs O3 brings aren’t just about being "faster" or "more accurate"—it’s about a shift in underlying thinking, showing intelligence that seems more "human-like":

- It can handle complex problems, break them down systematically, analyze them, and offer solutions, making it seem as if it's truly "thinking."

- It can autonomously decide when to use tools to help complete tasks, without needing to be directed—finding methods on its own.

- It relates language to images, reasoning about what a chart or illustration shows rather than simply describing it.

- Even more impressively, it can adjust its approach during an interaction based on feedback and tool results, showing a kind of adaptive intelligence, even if it doesn’t have full “consciousness.”

These abilities have led many to start reconsidering a crucial question: Is AGI truly around the corner?

Of course, we don’t need to rush to conclusions. While O3 is powerful, it is still not an “all-powerful” intelligent entity. It doesn’t set its own goals or understand emotions, and it cannot fully handle every complex real-world situation. It remains a tool: an incredibly advanced one, but still lacking subjective consciousness.

Final Thoughts

O3 may not be the endpoint of AGI, but it could very well be the turning point—a marker that makes us realize that AGI is truly near. It reminds us that the future of AI isn’t a far-off fantasy; it’s an evolving reality. The real challenge now lies in how we guide this evolution to ensure it is safe, controllable, and beneficial for humanity.

With O3, we’re not just using AI—we’re beginning to seriously coexist with it.

It’s also worth noting that O3's “superior performance” has not been without controversy. According to a TechCrunch report from April 20, 2025, the research organization Epoch AI found that O3 scored only about 10% on the FrontierMath benchmark, well below the score OpenAI had initially claimed. This is a reminder to keep a critical perspective when evaluating any AI model and to draw on multiple data sources rather than relying on company claims alone.

We’re also experiencing this shift firsthand. As an AI tool aggregator, Monica has already integrated OpenAI’s latest O3 model, hoping to provide users with smarter, more natural, and even “thoughtful” AI experiences in the near future. The future of AI isn’t just about being a tool—it’s about being a partner you can collaborate with and trust. We’ll continue to monitor AI's evolution and strive to bring this cutting-edge capability to every ordinary user.